We Don't Yet Understand ChatGPT's Potential

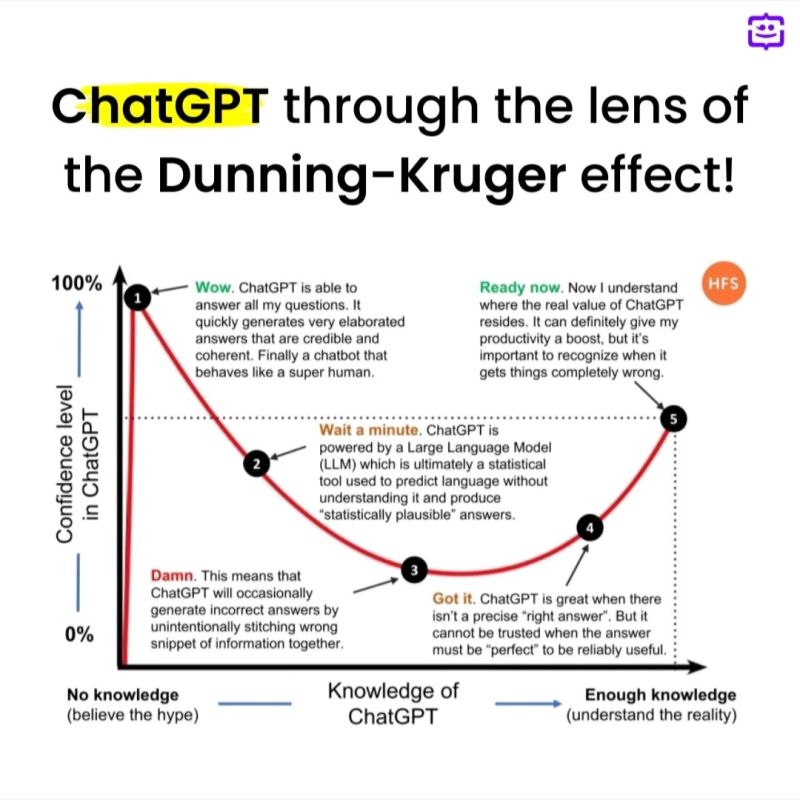

I recently saw a Twitter post in which a user described ChatGPT as the most powerful AI tool out there. Sure, it's clickbait (and misleading), but I want to explore how people can overestimate the potential of new technology. That overestimation leads to massive investment, media attention and hype before people's expectations decline to a more realistic level. In this article, I will use the Dunning-Kruger effect to show that people generally start off overconfident in their judgment (here, of a new technology's potential) before they truly understand it. Let's apply this to people's responses to the power of ChatGPT.

Image source: HFS.

ChatGPT and the Dunning-Kruger Effect

Artificial intelligence is rapidly advancing, and one area where we are seeing significant progress is natural language processing. One example is ChatGPT, a language model developed by OpenAI that is capable of carrying on a conversation with a user. However, as with any new technology, there is a risk of overestimating its power and capabilities. This tendency resembles the Dunning-Kruger effect, a cognitive bias in which individuals with limited knowledge or skills in a particular area overestimate their abilities and believe they are more competent than they actually are. In this article, we will explore the Dunning-Kruger effect in relation to ChatGPT and how people's perceptions of the technology can change over time.

The Dunning-Kruger Effect in Action

The Dunning-Kruger effect can manifest in different ways when it comes to ChatGPT. Some people may overestimate the technology's capabilities and assume that it can provide accurate and comprehensive answers to any question, regardless of complexity or nuance. They may believe that ChatGPT can fully understand the emotional context of a conversation and provide appropriate responses, even though the technology is not yet sophisticated enough to do so reliably.

On the other hand, some people may be skeptical of ChatGPT and dismiss it as a gimmick or a toy. They may assume that the technology is only useful for trivial tasks, like answering trivia questions or providing weather updates, and not capable of handling more complex or meaningful conversations.

However, research suggests that ChatGPT is capable of much more than simply answering basic questions or providing scripted responses. A study by MIT found that people were more likely to be honest and open about their thoughts and feelings when interacting with a chatbot compared to a human. This indicates that chatbots like ChatGPT have the potential to be valuable tools for mental health counseling and other areas where people may feel hesitant to share their experiences with a human.

Appreciating the Strengths and Limitations of ChatGPT

As people gain more experience with ChatGPT and develop a more nuanced understanding of its strengths and limitations, they will likely become more realistic in their expectations and evaluations of the technology. For example, someone who uses ChatGPT to help them with a customer service issue might initially be impressed with the technology's ability to generate quick and helpful responses. However, they might also encounter situations where ChatGPT struggles to understand their issue or provide a satisfactory solution.

Over time, they will likely develop a better sense of when and how to use ChatGPT effectively, and they may also become more appreciative of the ways in which the technology can supplement but not replace human interaction.

A report by Deloitte suggests that chatbots and other conversational AI technologies will become increasingly prevalent in the years to come. However, the report also emphasizes the importance of using these technologies ethically and responsibly. This means ensuring that they are transparent about their limitations, protecting users' privacy and data, and avoiding biases or discrimination.

Ultimately, by recognizing and embracing the benefits and limits of ChatGPT, we can harness its potential in ways that are both effective and ethical. This requires a willingness to learn and experiment with the technology, while also being mindful of its limitations and potential drawbacks.

Conclusion

ChatGPT is a powerful tool with the potential to revolutionize the way we communicate, but it is not a magic bullet that can solve all our problems or replace human connection entirely. By understanding and addressing the Dunning-Kruger effect in relation to ChatGPT, we can develop a more realistic and nuanced understanding of the technology, appreciating its strengths while also being aware of its limitations. As we continue to develop and refine chatbots like ChatGPT, it is important to use them transparently, responsibly, and ethically. By doing so, we can leverage these powerful tools to enhance our communication and improve our lives in meaningful ways. As we move into an increasingly digital and AI-driven world, our ability to navigate the intersection of technology and human interaction will be crucial, and the lessons we learn from our experience with ChatGPT can show us how to do so effectively.

References:

Kruger, J., & Dunning, D. (1999). Unskilled and unaware of it: How difficulties in recognizing one's own incompetence lead to inflated self-assessments. Journal of Personality and Social Psychology, 77(6), 1121–1134. https://doi.org/10.1037/0022-3514.77.6.1121

OpenAI. (2022). ChatGPT. https://beta.openai.com/docs/guides/chat-gpt/

Laranjo, L., Dunn, A. G., Tong, H. L., Kocaballi, A. B., Chen, J., Bashir, R., Surian, D., Gallego, B., Magrabi, F., Lau, A. Y., & Coiera, E. (2018). Conversational agents in healthcare: A systematic review. Journal of the American Medical Informatics Association, 25(9), 1248–1258. https://doi.org/10.1093/jamia/ocy071

McDowell, A., & Goward, P. (2019). The Ethics of Chatbots in Healthcare: A Principled Review. Health Informatics Journal, 25(4), 1494–1504. https://doi.org/10.1177/1460458219835424

Bain & Company. (2019). AI Transformation: The Next Chapter in Customer Experience. https://www.bain.com/contentassets/a07e10cfc9fd44c18a01446b06f96175/bain-report_ai-transformation.pdf

Deloitte. (2019). Conversational AI: Building the future of customer engagement. https://www2.deloitte.com/content/dam/insights/us/articles/4789_Conversational-ai/DI_Conversational-AI.pdf

Marlow, C. A., Lacerda, F., Bonacin, R., & Adaba, G. (2019). Is the chatbot ready for prime time? Using a mixed-method approach to evaluate the user experience of a chatbot for mental health counseling. Journal of Technology in Human Services, 37(2), 139–155. https://doi.org/10.1080/15228835.2019.1572775

Pereira, J. C., & Bertrand, F. (2019). Understanding the limits of AI. Harvard Business Review. https://hbr.org/2019/03/understanding-the-limits-of-ai

Weizenbaum, J. (1966). ELIZA—A computer program for the study of natural language communication between man and machine. Communications of the ACM, 9(1), 36–45. https://doi.org/10.1145/365153.365168